Let's Implement: Context Window Compression That Could Expand AI Conversations by 10x

The Problem: Hidden Limitations in Today's AI Interfaces

Every power user of AI assistants has experienced that frustrating moment: you're deep in a productive flow, making real progress on a complex problem, when suddenly—"Context window exceeded." Just as the AI has finally accumulated enough understanding of your project to be truly helpful, you're forced to start over.

Context windows are one of the most critical constraints in AI interaction, yet current interfaces offer users almost no visibility or control over this resource.

A Product Manager's Approach to Context Optimization

Looking at this through a product lens, there's a clear opportunity to improve the user experience without waiting for the next generation of models. By borrowing proven concepts from software development, such as version control's practice of storing changes instead of full copies, we could plausibly stretch the effective capacity of a context window by as much as 10x in scenarios dominated by repeated content, such as iterative drafting and coding.

1. Differential Storage for Iterative Content

Current Implementation: Each message is stored in full, even when, say, 90% of it is identical to a previous message.

Proposed Solution: Implement Git-inspired differential storage that captures only the changes between iterations.

Example Use Case:

When iteratively drafting an email with an AI assistant:

Dear Team,

I hope this email finds you well. I wanted to provide a quick update on our progress for the month of March.

[...entire email content...]

If a user makes a single addition and sends it back, the system would store:

Reference to original message (tiny token cost)

Only the specific changes (minimal token cost)
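One way to make the "reference plus changes" idea concrete is the minimal Python sketch that follows. It assumes messages are plain text compared line by line, uses a truncated SHA-256 hash as the "reference" (a nod to how Git addresses content by hash), and computes the changes with the standard library's `difflib.SequenceMatcher`. The `MessageStore` and `DeltaMessage` names are hypothetical and not part of any existing assistant's API.

```python
import difflib
import hashlib
from dataclasses import dataclass, field


@dataclass
class DeltaMessage:
    """Hypothetical record: a reference to a base message plus line-level edits."""
    base_id: str
    # Each edit is (start, end, new_lines): replace base lines [start:end] with new_lines.
    ops: list = field(default_factory=list)


class MessageStore:
    """Hypothetical store: new content is kept in full, revisions as deltas."""

    def __init__(self):
        self.full = {}    # message id -> full text
        self.deltas = {}  # message id -> DeltaMessage

    @staticmethod
    def make_id(text):
        # Content hash used as the "reference"; truncated for readability.
        return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]

    def add(self, text, base_id=None):
        msg_id = self.make_id(text)
        if msg_id in self.full or msg_id in self.deltas:
            return msg_id  # identical content is already stored; reuse it
        if base_id is None:
            self.full[msg_id] = text
            return msg_id
        old = self.get(base_id).splitlines(keepends=True)
        new = text.splitlines(keepends=True)
        matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
        # Keep only the non-equal opcodes: these are the actual changes.
        ops = [(i1, i2, new[j1:j2])
               for tag, i1, i2, j1, j2 in matcher.get_opcodes() if tag != "equal"]
        self.deltas[msg_id] = DeltaMessage(base_id, ops)
        return msg_id

    def get(self, msg_id):
        if msg_id in self.full:
            return self.full[msg_id]
        delta = self.deltas[msg_id]
        lines = self.get(delta.base_id).splitlines(keepends=True)
        # Apply edits from last to first so earlier indices stay valid.
        for start, end, new_lines in reversed(delta.ops):
            lines[start:end] = new_lines
        return "".join(lines)


store = MessageStore()
draft = ("Dear Team,\n"
         "\n"
         "I hope this email finds you well. I wanted to provide a quick update "
         "on our progress for the month of March.\n")
v1 = store.add(draft)
v2 = store.add(draft + "Please send any questions by Friday.\n", base_id=v1)
print(store.deltas[v2].ops)  # [(3, 3, ['Please send any questions by Friday.\n'])]
assert store.get(v2) == draft + "Please send any questions by Friday.\n"
```

The revision costs one inserted line plus a short hash instead of a second copy of the email. Because reconstruction walks the chain of bases, a real system would probably store a full snapshot every few revisions to keep delta chains short.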

This approach loosely mirrors how people handle a revised document: we don't re-memorize the parts that are identical, we note what has changed or is new.

2. Transforming the Developer Experience

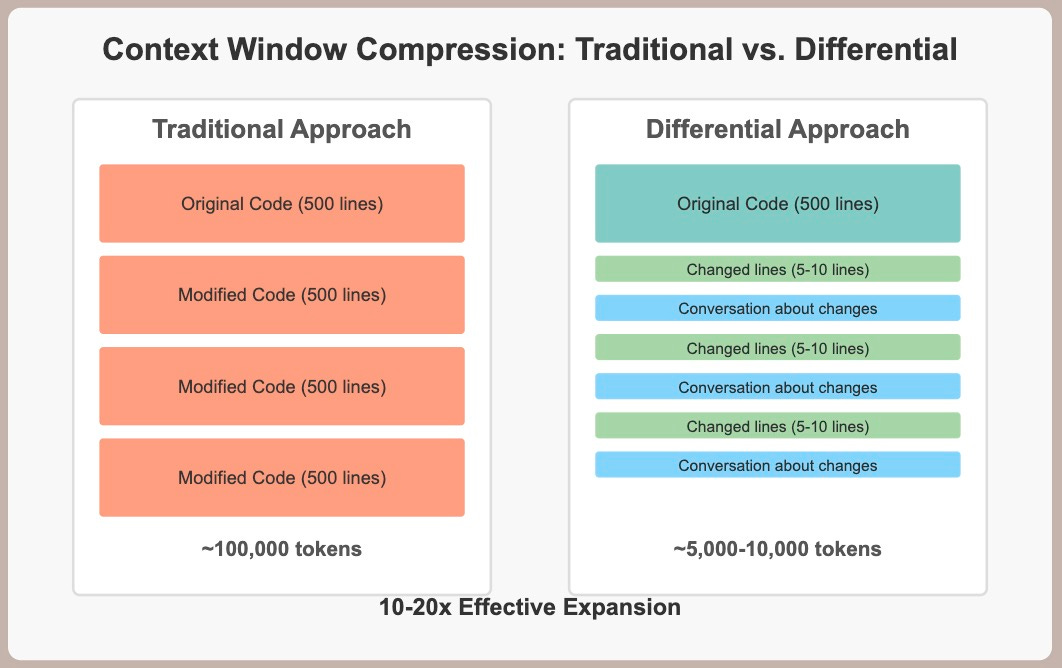

This approach would be particularly valuable for coding, where the savings could be larger still, possibly approaching a 20x expansion of effective context in long sessions that repeatedly share the same files with small edits.

Consider a typical development workflow:

Developer shares a 500-line source file

AI suggests targeted improvements

Developer implements changes and shares updated code

This cycle repeats throughout development

Currently, that 500-line file gets stored in the context window repeatedly, quickly exhausting the token budget. With differential compression:

Original file: stored once

Each iteration: only changed lines + conversation about changes
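The token savings depend on what the model actually receives. The following Python sketch assumes the model can follow unified diffs, and renders a file's history as the first version in full followed by zero-context diffs produced with the standard library's `difflib.unified_diff`. The 500-line file, ten versions, and three edited lines per iteration are synthetic assumptions chosen to match the workflow above, `render_history` is a hypothetical helper, and line counts stand in for tokens.

```python
import difflib


def render_history(versions):
    """Hypothetical helper: render successive versions of a file for a prompt,
    the first version in full, then each later version as a zero-context
    unified diff against its predecessor."""
    parts = ["".join(versions[0])]
    for prev, curr in zip(versions, versions[1:]):
        diff = difflib.unified_diff(prev, curr, fromfile="previous", tofile="current", n=0)
        parts.append("".join(diff))
    return parts


def count_lines(parts):
    # Lines are a rough stand-in for tokens in this sketch.
    return sum(part.count("\n") for part in parts)


# Synthetic history: a 500-line file revised 9 times, 3 scattered lines per revision.
base = [f"line {i}: original code\n" for i in range(500)]
versions = [base]
for iteration in range(1, 10):
    revised = list(versions[-1])
    for k in range(3):
        idx = (iteration * 37 + k * 101) % 500
        revised[idx] = f"line {idx}: edited in iteration {iteration}\n"
    versions.append(revised)

full_lines = sum(len(v) for v in versions)  # every version pasted in full
compact_lines = count_lines(render_history(versions))
print(full_lines, compact_lines, round(full_lines / compact_lines, 1))
# prints: 5000 599 8.3
```

Under these assumptions the compact history is roughly 8x smaller; the ratio grows with the number of iterations and shrinks as each edit touches more lines. The trade-off is that the model must mentally apply the diffs to know the file's current state, so a practical design might resend the full file periodically.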

The impact on productivity could be transformative:

Complex refactoring projects completed in single sessions

Debugging workflows that maintain full system context

Architectural decisions with complete historical context available

Business Impact and Product Vision

Implementing context compression wouldn't just improve technical metrics—it would transform how users experience AI assistants:

Improved User Satisfaction: Eliminating the frustrating "start over" experience

Higher Completion Rates: Complex tasks finished in single sessions instead of abandoned

Reduced Support Costs: Fewer support tickets around context limits

Competitive Advantage: Delivering effectively "larger" context windows without a larger model, since computing a diff is cheap compared with processing the tokens it replaces

As AI tools become more central to knowledge work, the platforms that optimize for real user workflows—not just raw model capabilities—will ultimately win the market.

Next Steps

This is the first in a series exploring practical enhancements to AI interfaces that could dramatically improve user experience with relatively modest engineering investment.