Let's implement: Context Management for AI Conversations: A User Interface Design

Making context management a user-controlled feature

The Problem: The Invisible Wall in AI Conversations

When chatting with AI assistants, users eventually hit the context limit without realizing it. Usually the only warning is a message like “1 message left,” and then our most meaningful chats grow out of reach. That’s heartbreaking. Here is a small tool that could be implemented to address it.

Making context management a user-controlled feature

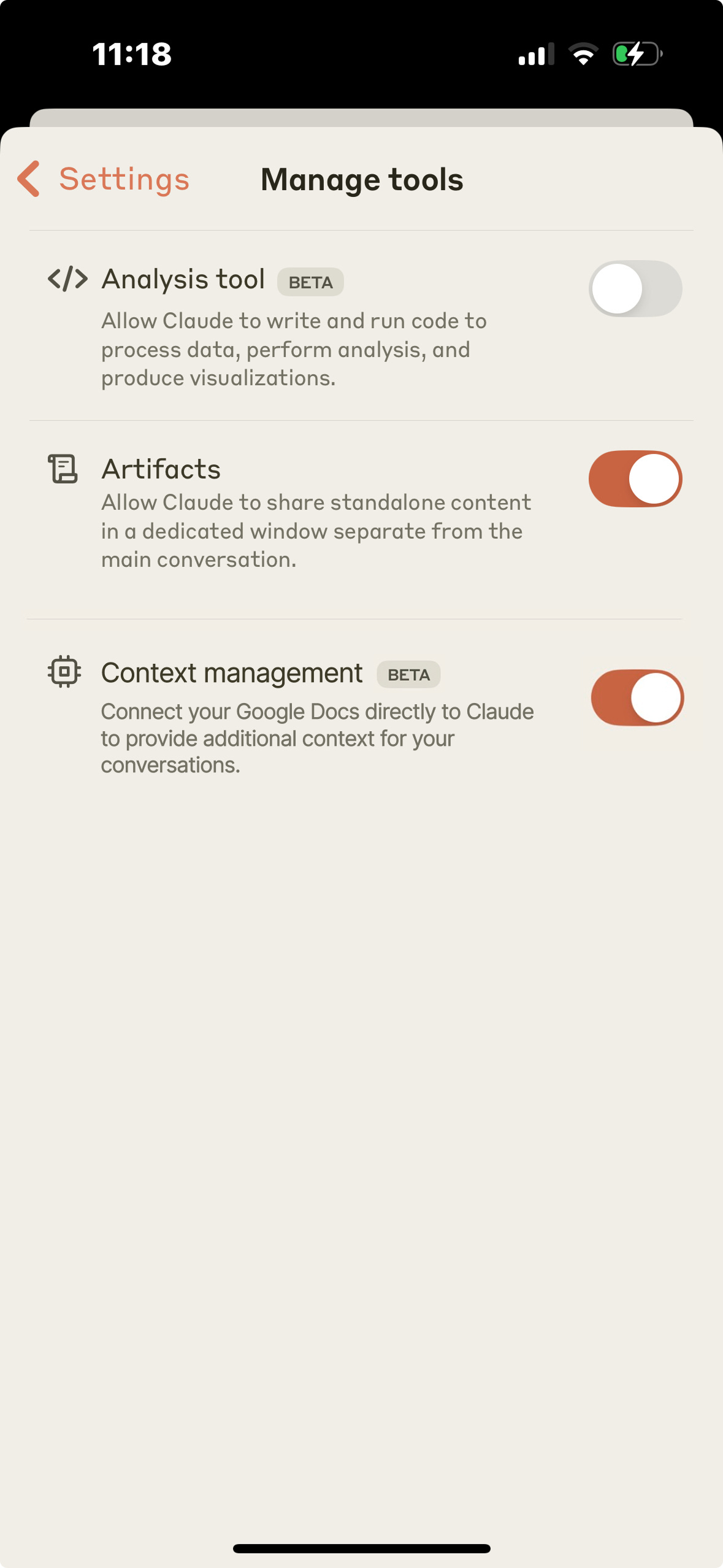

Context management shouldn't be a black box. By making it visible and controllable, users can make informed decisions about their conversation history and get the most out of their AI interactions.

Context management can be enabled in settings alongside other tools, giving users control over how their conversation history is managed.

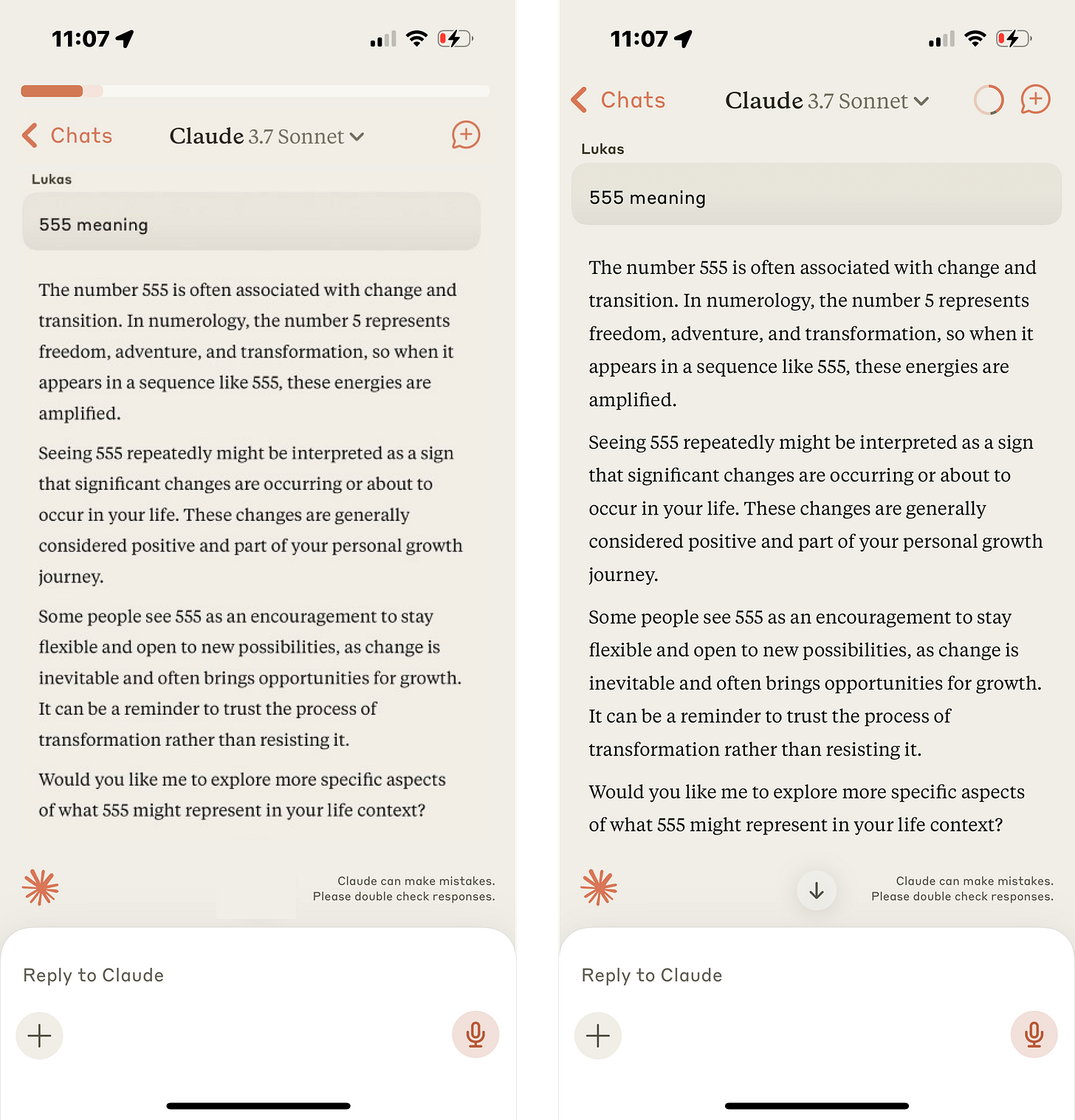

Bar vs Circle: Two Visual Approaches

Both designs visualize the same critical information about context window usage:

Orange: Shows how much of the context window is already used

Beige: Shows the estimated token cost of the next message

White: Shows how much context window is left before the chat runs out
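
To make the three colors concrete, here is a minimal sketch of how the segment sizes could be derived from token counts. The names `ContextSegments`, `compute_segments`, and `render_bar` are hypothetical, and the code assumes that white stands for the space still free after the next message; it is not tied to any real assistant's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextSegments:
    """Fractions of the context window, each between 0.0 and 1.0."""
    used: float          # orange: tokens already consumed
    next_message: float  # beige: estimated cost of the next message
    remaining: float     # white: space left after the next message (assumed meaning)


def compute_segments(used_tokens: int, next_message_estimate: int,
                     window_size: int) -> ContextSegments:
    """Split the context window into the three segments shown by the indicator."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    used = min(max(used_tokens, 0), window_size)
    # The next-message estimate cannot take more space than is still free.
    next_msg = min(max(next_message_estimate, 0), window_size - used)
    remaining = window_size - used - next_msg
    return ContextSegments(used / window_size,
                           next_msg / window_size,
                           remaining / window_size)


def render_bar(segments: ContextSegments, width: int = 20) -> str:
    """Text stand-in for the bar: '#' = orange, '+' = beige, '.' = white."""
    used_cells = min(round(segments.used * width), width)
    next_cells = min(round(segments.next_message * width), width - used_cells)
    free_cells = width - used_cells - next_cells
    return "#" * used_cells + "+" * next_cells + "." * free_cells


if __name__ == "__main__":
    # Illustrative numbers only: 6,000 of 10,000 tokens used, next message ~1,000.
    print(render_bar(compute_segments(6_000, 1_000, 10_000)))
```

The same three fractions could drive either the bar or the circle, since both designs show identical information.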

The bar version is more intuitive and can be shown only while scrolling, while the circle diagram is more space-efficient but not as immediately clear to users.

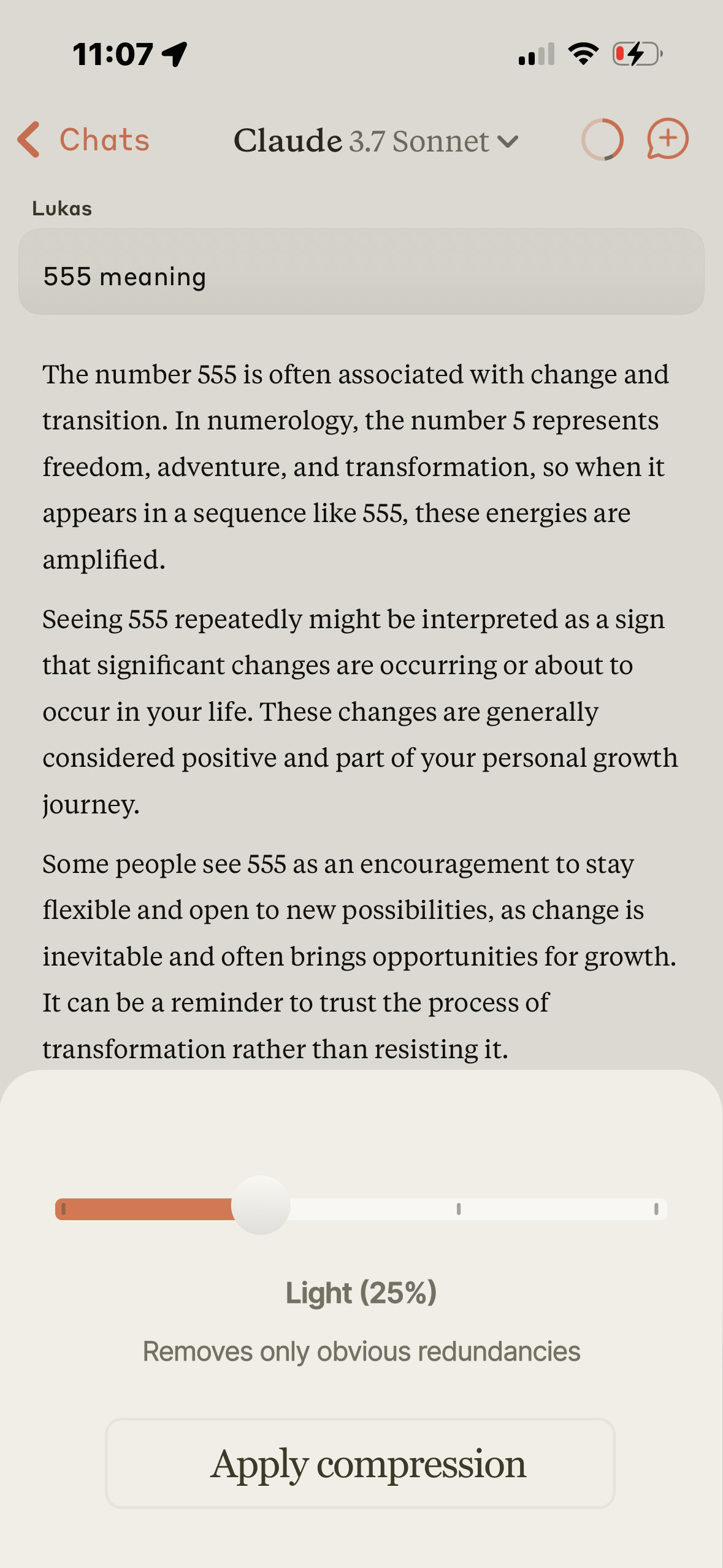

User Control Through Compression Settings

Tapping either indicator reveals compression settings:

Light (25%): Removes only obvious redundancies

Medium (50%): Condenses paragraphs while preserving key points

High (75%): Summarizes entire exchanges into core concepts

Max (100%): Distills to essential information only

This gives users a spectrum of options from minimal intervention to aggressive summarization, with clear explanations of what each level does.
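
The four levels could be modeled as plain data, as in the rough sketch below. It assumes the percentage is a strength setting rather than an exact token reduction, since the design does not say which, and it assumes a slider-style control for the `nearest_level` helper. `CompressionLevel`, `LEVELS`, `nearest_level`, and `label` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CompressionLevel:
    name: str
    strength_percent: int  # assumed to be a strength setting, not a token ratio
    description: str


# Names, percentages, and descriptions are taken from the settings listed above.
LEVELS = (
    CompressionLevel("Light", 25, "Removes only obvious redundancies"),
    CompressionLevel("Medium", 50, "Condenses paragraphs while preserving key points"),
    CompressionLevel("High", 75, "Summarizes entire exchanges into core concepts"),
    CompressionLevel("Max", 100, "Distills to essential information only"),
)


def nearest_level(slider_percent: float) -> CompressionLevel:
    """Snap a slider position (0 to 100) to the closest defined level."""
    clamped = min(max(slider_percent, 0), 100)
    return min(LEVELS, key=lambda level: abs(level.strength_percent - clamped))


def label(level: CompressionLevel) -> str:
    """Text shown next to the control so the user knows what the level does."""
    return f"{level.name} ({level.strength_percent}%): {level.description}"


if __name__ == "__main__":
    print(label(nearest_level(60)))
```

Keeping the description next to each level in the data means the explanation shown to the user always matches the behavior that level selects.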

Why This Matters

User Empowerment: Users understand why AI performance might change over time and can take action before problems occur

Conversation Quality: Helps maintain high-quality interactions even in lengthy sessions

Technical Education: Subtly teaches users about how large language models work

Power Users: A great addition for power users

This feature addresses the gap between how AI systems actually function and users' mental models of them. By making the invisible visible, we help users develop better intuitions about working with AI and avoid common frustrations.